Unicode Text and File Editor

UTF-8 and UTF-16 Encoding

Sections in this power tip:

- A brief overview of Unicode

- The difference between UTF-8, UTF-16, etc.

- Configure UltraEdit to properly open Unicode files

- Copying and pasting Unicode data into UltraEdit

- Understanding BOMs (Byte Order Markers)

- Configuring UltraEdit to save Unicode/UTF-8 data with BOMs

- Converting Unicode/UTF-8 files to ASCII files

While UltraEdit and UEStudio include handling for Unicode files and characters, you do need to make sure that the editor is configured properly to handle the display of the Unicode data. In this tutorial, we’ll cover some of the basics of Unicode-encoded data and how to view and manipulate it in UltraEdit.

A brief overview of Unicode

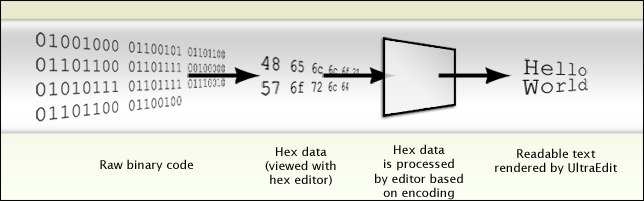

To understand how Unicode works, you need to first understand how encoding works. Any plain text file containing data that you open and edit in UltraEdit is displayed using an encoding. In the simplest terms, encoding is how the raw binary data of a file (the 0s and 1s that comprise a file on the disk) is interpreted and displayed in the editor as legible text that can be manipulated with your keyboard. You can think of encoding as a type of “decoder ring” for a code language. Since we know that everything on our computer is comprised of 0’s and 1’s (think The Matrix), you can visualize how encoding works by looking over the following diagram.

Unicode is a character encoding standard, developed many years ago with the goal of mapping most of the world’s written characters to a single character set. The practical benefit of this aim is that any user in any location can view Chinese scripts, English alphanumeric characters, or Russian and Arabic text – all within the same file and without having to manually futz with the encoding (code page) for each specific text. Prior to Unicode, you would probably have needed to select a different code page to see each script, if the script even had a code page and a font that supported it, and you wouldn’t be able to view multiple languages or scripts within the same file at all.

Tim Bray, in his article “On the Goodness of Unicode”, explains Unicode in simple terms:

The basics of Unicode are actually pretty simple. It defines a large (and steadily growing) number of characters – just over 100,000 last time I checked. Each character gets a name and a code point, for example LATIN CAPITAL LETTER A is 0041 and TIBETAN SYLLABLE OM is 0F00. Unicode includes a table of useful character properties such as “this is lower case” or “this is a number” or “this is a punctuation mark”.

(Note: As of this update to this power tip, on Nov 2, 2018, there are exactly 137,374 characters in Unicode.)
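To make the idea of names, code points, and character properties concrete, here is a minimal sketch using Python’s standard `unicodedata` module. The helper name `describe` is only for illustration; the two characters are the ones mentioned in the quote above.

```python
import unicodedata


def describe(ch: str) -> str:
    """Return the code point and official Unicode name of one character."""
    return f"U+{ord(ch):04X} {unicodedata.name(ch)}"


print(describe("A"))       # U+0041 LATIN CAPITAL LETTER A
print(describe("\u0F00"))  # U+0F00 TIBETAN SYLLABLE OM

# Character properties are exposed as short category codes.
print(unicodedata.category("a"))  # Ll (letter, lowercase)
print(unicodedata.category("7"))  # Nd (number, decimal digit)
print(unicodedata.category("!"))  # Po (punctuation, other)
```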

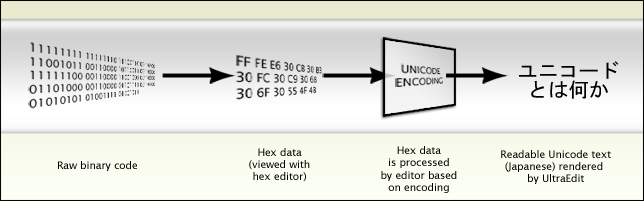

So, with this knowledge in mind, an updated diagram for how Unicode encoding works is shown below:

Every encoding works the same way as shown in the above diagram, but each encoding will (usually) give different results of what is displayed in the editor. Unicode is a very robust standard that covers most of the written languages in the world today.

The difference between UTF-8, UTF-16, etc.

Because Unicode encompasses well over a hundred thousand characters, multiple bytes are required for many of them. Why? Well, as you might already know, in the world of computers, one byte is composed of 8 bits. A bit is the most basic and smallest piece of electronic data and can either be a 0 or a 1 (or “off” / “on”). So this means a single byte can be one of 256 possible combinations of bits (2 to the power of 8). This means that you can only support up to 256 characters with an encoding that uses a single byte for each of those characters. Obviously you’d need far more combinations than 256 to support all characters in the world.
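If you’d like to check that arithmetic yourself, the following short sketch counts the possible bit combinations for a given number of bytes. The function name `combinations` is just an illustrative choice, and the character count is the figure quoted earlier in this power tip.

```python
def combinations(num_bytes: int) -> int:
    """Number of distinct values that fit in num_bytes bytes (8 bits each)."""
    return 2 ** (8 * num_bytes)


UNICODE_CHARACTERS = 137_374  # count quoted in this power tip (Nov 2018)

for n in (1, 2, 4):
    enough = combinations(n) >= UNICODE_CHARACTERS
    print(f"{n} byte(s): {combinations(n):,} combinations, enough: {enough}")
```

One byte gives 256 combinations and two bytes give 65,536, both short of the character count above; four bytes give more than four billion.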

To meet this requirement the developers of Unicode implemented a two-byte character system, but even that didn’t provide enough possible combinations for all the world’s characters! But solving this problem wasn’t as simple as just increasing it up to three or four bytes per character because of memory and space considerations – if each character in a plain text file requires 4 bytes of disk space (or memory space, if it’s loaded into memory), you’re essentially quadrupling the amount of space needed to store that data! That’s not efficient at all.

UTF-16 was developed as an alternative, using 16 bits (or 2 bytes) for most characters and a pair of 16-bit units (4 bytes) for the characters that don’t fit in the two-byte range. If you’re doing the math, you’ve already realized that the space calculations still aren’t great, and there is still potential for a lot of wasted space with UTF-16 encoded data, especially if you’re only ever using characters that need just 8 bits (or 1 byte). Additionally, because UTF-16 relies upon 16-bit units, many existing programs and applications had to add special, separate support (essentially duplicating all their text handling code) for UTF-16 because they were designed to support 8-bit characters. If text came into the program that was in some other encoding, it would be processed by the normal text handling code the developer had written for 8-bit characters. If it came in as UTF-16, it would go through special UTF-16 code – if the developer had even written code for it! As you can imagine, writing code to handle these two different types of byte architectures for character encoding could make for quite messy code.

Fortunately, a Unicode encoding called UTF-8 was developed to conserve space and optimize the data size of Unicode characters (and subsequently file size) without requiring a hard-and-fast allocation of 16 bits per character. UTF-8 stands for “Unicode Transformation Format – 8-bit”. Yep, you guessed it – the big difference between UTF-16 and UTF-8 is that UTF-8 goes back to the standard of 8-bit units instead of 16-bit ones. This means it’s (mostly) compatible with existing systems and programs that are designed to handle text one byte (8 bits) at a time.

Additionally, UTF-8 still encompasses the Unicode character set, but its system of storing characters is different and improved beyond the “each character gets 16 bits” model of UTF-16. UTF-8 assigns a different number of bytes to different characters – one character may use only one byte (8 bits), while another might use four. The most commonly used characters have been assigned single- and double-byte combinations, which means that for most people the size of data doesn’t get too much bigger with UTF-8.

The only drawback to this is that more processing power is required on the system interpreting data encoded in UTF-8, since not every character is represented by the same number of bytes. But this extra processing is rather negligible to performance overall.
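The size trade-offs described above are easy to see in practice. The sketch below encodes a few sample characters and counts the bytes each encoding needs. The helper `encoded_sizes` is a hypothetical name, and the `-le` codec variants are used only so that Python does not prepend a byte order marker to the output.

```python
def encoded_sizes(text: str) -> dict:
    """Return the number of bytes needed to store text in each encoding."""
    encodings = ("utf-8", "utf-16-le", "utf-32-le")
    return {name: len(text.encode(name)) for name in encodings}


for ch in ("A", "é", "€", "😀"):
    print(repr(ch), encoded_sizes(ch))

# 'A'  -> utf-8: 1, utf-16-le: 2, utf-32-le: 4
# 'é'  -> utf-8: 2, utf-16-le: 2, utf-32-le: 4
# '€'  -> utf-8: 3, utf-16-le: 2, utf-32-le: 4
# '😀' -> utf-8: 4, utf-16-le: 4, utf-32-le: 4
```

Plain English text stays at one byte per character in UTF-8, while UTF-32 always spends four.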

There are other Unicode encodings such as UTF-32 and UTF-7, but UTF-8 is the most popular and widely-used Unicode format today. Most SQL databases and websites you see are encoded in UTF-8, and in fact, in 2008 Google said that UTF-8 had become the most common encoding for HTML files. So if you ask us, we recommend the UTF-8 encoding format when working with Unicode in UltraEdit and UEStudio as well!

For more information on Unicode, read over the following articles:

- “On the Goodness of Unicode” by Tim Bray

- An article by Joel Spolsky

And of course, be sure to visit the official Unicode site for more detailed information and Unicode updates.

Configure UltraEdit to properly open Unicode files

Whew! Now that we’ve gotten the history and fundamentals of Unicode out of the way, how should we configure UltraEdit to handle Unicode text files?

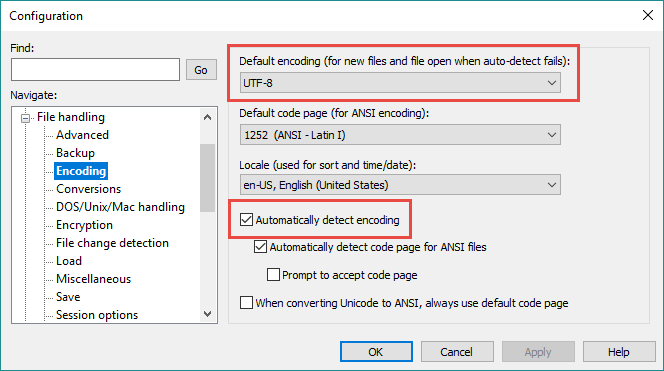

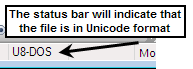

If you have Unicode files that you’d like to open in UltraEdit, you’ll need to make sure you set UltraEdit to detect and display Unicode. All of this can be configured in Advanced » Settings » File Handling » Encoding. There are 2 settings here that are important for Unicode handling in UltraEdit.

Default encoding (for new files and file open when auto-detect fails)

This setting allows you to set the default encoding for new files and the encoding UltraEdit should select when it can’t automatically figure out the encoding originally used to create the file. In other words, what should UltraEdit “assume” the file is, if it can’t figure it out otherwise? If you deal a lot with Unicode data, we recommend setting this to UTF-8. Remember, the de facto standard for Unicode seems to be UTF-8, and more and more of the computing world is moving in that direction.

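The awesome thing about UTF-8 is that its first 128 characters match, byte-for-byte, the 128 characters of ASCII, which are also the first 128 characters of the most popular ANSI code page (ANSI 1252). So if you have a non-Unicode file containing only regular ASCII characters that is interpreted by UltraEdit (or any other application) as UTF-8, you won’t be able to tell the difference. Characters above that range, such as accented letters, are stored as a single byte in ANSI 1252 but as two or more bytes in UTF-8, so they only display correctly when the right encoding is selected.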
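Here is a small sketch of that compatibility, using Python’s built-in codecs (`cp1252` is Python’s name for ANSI 1252; the helper `same_bytes` is a hypothetical name). Note that the byte-for-byte match holds for the 7-bit ASCII range only; an accented letter is stored differently in the two encodings.

```python
def same_bytes(text: str) -> bool:
    """True if text is stored identically in ANSI 1252 and UTF-8."""
    return text.encode("cp1252") == text.encode("utf-8")


print(same_bytes("Hello, UltraEdit!"))  # True: plain ASCII only
print(same_bytes("café"))               # False: 'é' differs

print("é".encode("cp1252"))  # b'\xe9'      (one byte)
print("é".encode("utf-8"))   # b'\xc3\xa9'  (two bytes)
```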

Automatically detect encoding

Make sure this option is checked, otherwise UltraEdit won’t do anything to try and automatically detect the encoding of the files you open. This means that files that are obviously UTF-8 or UTF-16 would look like scrambled, unintelligible text with the option disabled.
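To give a feel for what encoding auto-detection involves, here is a deliberately simplified sketch. It is not UltraEdit’s actual detection logic, which isn’t described in this power tip; the function name `guess_encoding` and the fallback of `cp1252` are assumptions made for illustration, and UTF-32 is left out to keep it short.

```python
import codecs


def guess_encoding(data: bytes, fallback: str = "cp1252") -> str:
    """Guess a Python codec name for raw file bytes (simplified sketch)."""
    # 1. A BOM at the start of the file is the strongest hint.
    if data.startswith(codecs.BOM_UTF8):
        return "utf-8-sig"
    if data.startswith((codecs.BOM_UTF16_LE, codecs.BOM_UTF16_BE)):
        return "utf-16"
    # 2. No BOM: see whether the bytes are valid UTF-8.
    try:
        data.decode("utf-8")
        return "utf-8"
    except UnicodeDecodeError:
        # 3. Otherwise fall back to the configured default encoding.
        return fallback


print(guess_encoding("héllo".encode("utf-8")))    # utf-8
print(guess_encoding("héllo".encode("cp1252")))   # cp1252
print(guess_encoding(codecs.BOM_UTF8 + b"hello")) # utf-8-sig
```

The fallback plays the same role as the “Default encoding” setting described above: it is only used when nothing in the file gives the encoding away.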

Copying and pasting Unicode data into UltraEdit

You may want to copy and paste Unicode data from an external source to a new file in UltraEdit. In many earlier versions of UltraEdit, if you tried this, you may have seen that the characters were pasted into UltraEdit as garbage characters, little boxes, question marks, or something completely different than what you were expecting. That happened because new files in UltraEdit are by default created with ASCII encoding, not Unicode/UTF-8. Refer to our diagram above; the hex data is correct for the Unicode characters, but because the encoding has not been set properly, the result is incorrect.

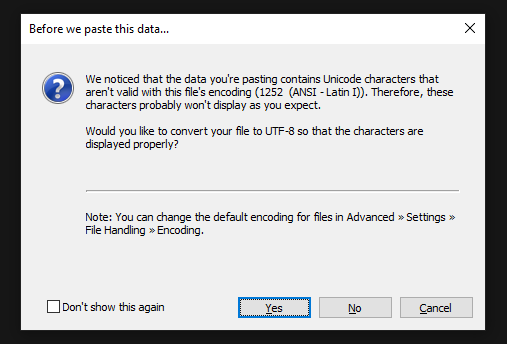

The good news is that starting with UltraEdit v24.00 / UEStudio 17.00, UltraEdit now detects if Unicode characters are being pasted into a non-Unicode file and prompts you to convert the file before doing the paste.

In previous versions, you would need to set the correct encoding for the new file, before actually pasting in the Unicode data. This could be done by going to File » Conversions and selecting ASCII to UTF-8.
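The garbage characters described in this section are easy to reproduce outside the editor. In this minimal sketch the bytes are correct UTF-8, but reading them with the wrong encoding (here ANSI 1252, which Python calls `cp1252`) produces junk. The variable names are illustrative only.

```python
original = "café"
raw = original.encode("utf-8")  # the bytes on disk: 63 61 66 C3 A9

wrong = raw.decode("cp1252")    # bytes interpreted with the wrong encoding
right = raw.decode("utf-8")     # bytes interpreted with the right encoding

print(wrong)  # cafÃ©
print(right)  # café
```

The data itself never changed; only the “decoder ring” used to read it did.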

Understanding BOMs (Byte Order Markers)

A Byte Order Marker (BOM for short) is a sequence of bytes at the very beginning of a file that is used as a “flag” or “signature” for the encoding and/or hex byte order that should be used for the file. With UTF-8 encoded data, this is normally the three bytes (represented in hex) EF BB BF. For UTF-16 data, the BOM also tells the editor whether the data is in big endian or little endian format: FE FF marks big endian and FF FE marks little endian. Big endian Unicode data simply means that the most significant byte of each 16-bit unit is stored first, while little endian stores it last. BOMs are not always essential for displaying Unicode data, but they can save developers headaches when writing and building applications. The BOM is one of the first things UltraEdit looks for when attempting to determine what encoding a file uses when it’s opened.
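The byte order difference is easiest to see on a single character. This sketch prints the bytes of the letter A in both UTF-16 byte orders, followed by the BOM constants from Python’s standard `codecs` module. The helper `hex_bytes` is a hypothetical name used only for display.

```python
import codecs


def hex_bytes(data: bytes) -> str:
    """Format bytes as space-separated uppercase hex pairs."""
    return " ".join(f"{b:02X}" for b in data)


# The same character (U+0041) in the two UTF-16 byte orders.
print(hex_bytes("A".encode("utf-16-be")))  # 00 41
print(hex_bytes("A".encode("utf-16-le")))  # 41 00

# The BOMs that announce each format at the start of a file.
print(hex_bytes(codecs.BOM_UTF8))      # EF BB BF
print(hex_bytes(codecs.BOM_UTF16_BE))  # FE FF
print(hex_bytes(codecs.BOM_UTF16_LE))  # FF FE
```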

If a file contains a BOM, but the application handling the file isn’t built to detect or respect the BOM, then the BOM will actually be displayed as part of the file’s contents – usually junk characters like “ï»¿” (for a UTF-8 BOM) or “ÿþ” (for a UTF-16 little endian BOM), which are the ANSI equivalent of the otherwise-invisible BOM. That’s why it’s so important to have a text editor like UltraEdit that can properly detect and handle BOMs.

If you’re opening files in UltraEdit and seeing these “junk” characters at the beginning of the file, this means you have not set the above-mentioned Unicode detection options properly. Conversely, if you’re saving Unicode files that others are opening with other programs that show these junk characters, then the other programs are either unable or not configured to properly handle BOMs and Unicode data.
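The following sketch shows where those junk characters come from, using Python as a stand-in for “an application that opens the file”. The variable names are illustrative. The `utf-8-sig` codec is Python’s BOM-aware UTF-8 decoder, and `cp1252` is its name for ANSI 1252.

```python
import codecs

data = codecs.BOM_UTF8 + "Hello".encode("utf-8")  # file saved with a BOM

bom_unaware = data.decode("cp1252")    # BOM bytes shown as ANSI characters
bom_aware = data.decode("utf-8-sig")   # BOM recognized and removed

print(bom_unaware)  # ï»¿Hello
print(bom_aware)    # Hello

# A UTF-16 little endian BOM read as ANSI text gives the other familiar pair.
print(codecs.BOM_UTF16_LE.decode("cp1252"))  # ÿþ
```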

More information on BOMs and the different endians/UTF formats is available on the official Unicode website.

Configuring UltraEdit to save Unicode/UTF-8 data with BOMs

If you’d like to globally configure UltraEdit to save all UTF-8 files with BOMs, you can set this by going to Advanced » Settings » File Handling » Save. The first two options here, “Write UTF-8 BOM header to all UTF-8 files when saved” and “Write UTF-8 BOM on new files created within this program (if above is not set)” should be checked. Conversely, if you do not want the BOMs, make sure these are not checked.

You can also save UTF-8 files with BOMs on a per-file basis. In the File » Save As dialog, there are several options in the “Format” drop-down list box for Unicode formatting with and without BOMs.
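If you ever need to produce the same two flavors of UTF-8 file from a script, the difference comes down to three bytes. This is a minimal sketch in Python, not a description of how UltraEdit writes files; the names `save_text` and `saved_bytes` are hypothetical, and a temporary folder is used so nothing is left on disk.

```python
import os
import tempfile


def save_text(path: str, text: str, with_bom: bool) -> None:
    """Write text as UTF-8, optionally preceded by the UTF-8 BOM."""
    encoding = "utf-8-sig" if with_bom else "utf-8"
    with open(path, "w", encoding=encoding, newline="") as handle:
        handle.write(text)


def saved_bytes(text: str, with_bom: bool) -> bytes:
    """Save text to a temporary file and return the raw bytes written."""
    with tempfile.TemporaryDirectory() as folder:
        path = os.path.join(folder, "sample.txt")
        save_text(path, text, with_bom)
        with open(path, "rb") as handle:
            return handle.read()


print(saved_bytes("hi", with_bom=True))   # b'\xef\xbb\xbfhi'
print(saved_bytes("hi", with_bom=False))  # b'hi'
```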

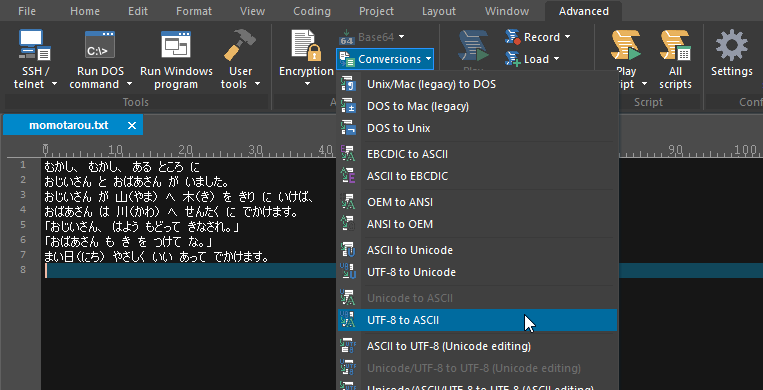

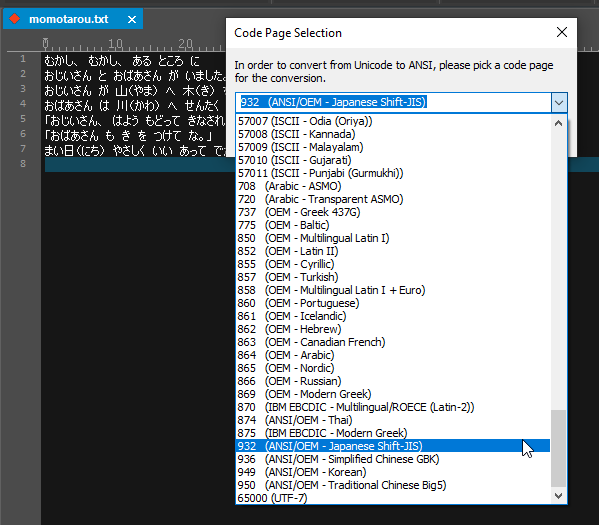

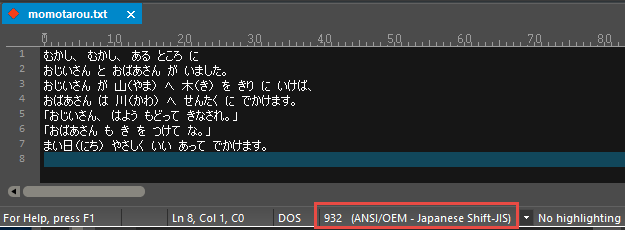

Converting Unicode/UTF-8 files to ASCII files

You might run into a situation where you need to convert a Unicode-encoded file into regular ASCII. Perhaps you have some old program or application that wasn’t designed to handle Unicode encodings. The good news is that UltraEdit provides a way for you to easily convert Unicode-based files to regular ASCII files. This is a very simple process. All you need to do is go to the Advanced tab and click the Conversions drop down, then choose the conversion option that matches what you want to do.

For example, if you have a UTF-8 file containing Japanese characters that you’d like to convert to ASCII, choose “UTF-8 to ASCII” in this drop down.

You can also convert the other way; for example, from ASCII to UTF-8. In this case you don’t need to select a code page, since Unicode eliminates the need for code pages: it already covers the characters of all of them.
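One thing worth keeping in mind with conversions away from Unicode is that the target encoding may simply have no slot for some characters. The sketch below illustrates this in Python rather than in UltraEdit; the function name `to_ascii` and the choice to substitute a question mark are assumptions for the example, and UltraEdit’s own conversion may behave differently.

```python
def to_ascii(text: str) -> str:
    """Convert text to plain ASCII, replacing anything ASCII cannot hold."""
    return text.encode("ascii", errors="replace").decode("ascii")


print(to_ascii("plain text"))  # plain text
print(to_ascii("naïve 日本語"))  # na?ve ???

# Going the other way is lossless: ASCII bytes are already valid UTF-8.
ascii_bytes = "plain text".encode("ascii")
print(ascii_bytes.decode("utf-8"))  # plain text
```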

To sum it all up…

Unicode is a very complex system with thousands of characters, but it has been refined and polished to be easily accessed and used by anyone. The world has responded, moving towards UTF-8 as the standard for computing. To keep in step with technology, make sure you’re using a text editor like UltraEdit that can leverage the power and flexibility of Unicode!